Excellent responses @jwulf. Lots of good information all in one place. We have always assumed that BPMN processing is not free, but it is good to have an approximate number.

Some additional notes.

While thinking about performance, note that clustering Zeebe is mostly about achieving fault tolerance and throughput, where throughput means how many workflow instances you complete in a given amount of time. The number of workflow instances that can be started is not a realistic measure of performance, since creating and starting workflow instances only for them to fail or never complete is not useful. Clustering comes with the overhead of managing nodes, partitioning, and replication, which takes CPU cycles away from actually executing workflow instances. In other words, it is not free, which is why you should not expect x times as many completed workflow instances if you increase your broker count by a factor of x. Expect less than x and be happy with that.
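To make the "less than x" point concrete, here is a toy calculation. It is only a sketch: the class name, the `overheadFraction` parameter, and the idea that clustering costs a flat fraction of each broker's capacity are all assumptions made for illustration, not measurements or anything Zeebe reports.

```java
// Toy model only: assumes clustering costs every broker the same fixed
// fraction of its capacity. Real overhead depends on partitions,
// replication, and load, so treat the numbers as illustrative.
final class ScalingEstimate {

    /**
     * @param singleBrokerRate completed instances per second on one standalone broker
     * @param brokers          number of brokers in the cluster (at least 1)
     * @param overheadFraction hypothetical share of capacity lost to clustering, in [0, 1)
     */
    static double estimatedCompletedPerSecond(double singleBrokerRate,
                                              int brokers,
                                              double overheadFraction) {
        if (brokers < 1 || overheadFraction < 0.0 || overheadFraction >= 1.0) {
            throw new IllegalArgumentException("brokers >= 1 and 0 <= overheadFraction < 1 required");
        }
        // A single standalone broker pays no clustering overhead in this model.
        double usableShare = (brokers == 1) ? 1.0 : 1.0 - overheadFraction;
        return singleBrokerRate * brokers * usableShare;
    }

    public static void main(String[] args) {
        double one = estimatedCompletedPerSecond(100.0, 1, 0.2);
        double three = estimatedCompletedPerSecond(100.0, 3, 0.2);
        // Tripling the brokers yields less than triple the completed instances.
        System.out.println("1 broker: " + one + "/s, 3 brokers: " + three + "/s");
    }
}
```

If you measure your own single-broker rate and plug in a guess for the overhead, the gap between the result and `brokers * singleBrokerRate` is the part you should not plan on getting.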

In terms of raw performance, meaning how fast workflow instances complete, this depends on several factors including broker load. But I would say that if you assume a worst-case scenario on broker load to account for the additional overhead of clustering, partitioning, replication, etc., then overall workflow complexity and the execution time of each job run by a worker will carry greater weight in this equation. That said, a fast machine lets things get done quicker.

I like equations, so here are some guidelines we use:

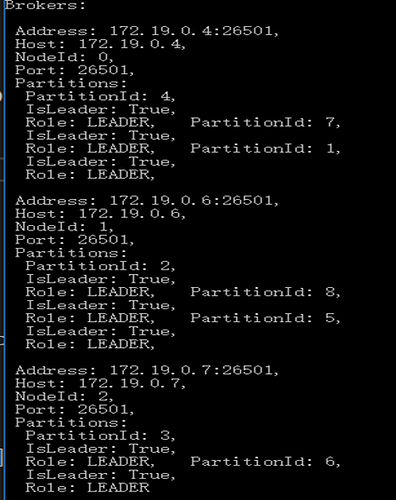

broker load = function (number of partitions + replications). If you anticipate starting lots of workflow instances at once (the burst scenario) and are not overly worried about how fast they complete because some jobs take a long time, be prepared to scale Zeebe horizontally.

number of workflow instances completed = function (broker load + flow complexity). Same argument. If you are concerned about the number of workflow instances that can be started, such as when dealing with bursts, again horizontal scaling of Zeebe is a good thing.

execution time per workflow instance to complete = function (broker load + complexity of workflow). If you want workflow instances to complete relatively quickly, then deploy your broker on beefy machines and ensure your jobs are not taking a long time. Also pay attention to variable sizes.

Summarizing notes for best practices:

- Keep workflow logic relatively straightforward and not very complex if possible.

- Always think about the size of workflow variables and strive to keep workflow variables relatively small. Large documents incur a serialization hit, not to mention storage space. Think of the performance hit during replication as well.

- Fetch only those variables needed in each workflow step. So leverage the fetchVariables API as in:

client.newWorker().jobType("some-type").handler(handler).fetchVariables("only", "those", "you", "need").open(); // handler is your own JobHandler

- Keep jobs relatively simple and ensure they return quickly, if possible. Small, quick jobs may appear to produce more chatter and create more events, but they give you better visibility and more opportunities for optimization, and they free RocksDB from having to maintain many incomplete instances, which is also a price paid during replication.

- For broker hardware, use the beefiest machine you can afford, including a fast CPU and fast memory. CPU speed lets Zeebe do things quicker. Fast memory goes without saying; in addition, sufficient capacity lets a broker hold more state, which means processing more workflow instances.
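On the variable-size point, one cheap habit is to check how big the variables payload is before handing it to the workflow. The sketch below uses only the JDK. The class name and the 32 KiB budget are hypothetical choices for illustration, not a Zeebe limit or API, and the JSON string stands in for whatever your worker would send as variables.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper: measures a variables payload before it is sent.
final class VariableSizeGuard {

    // Illustrative budget picked for this sketch; it is NOT a Zeebe limit.
    static final int MAX_VARIABLE_BYTES = 32 * 1024;

    /** Size of the JSON payload once encoded as UTF-8. */
    static int sizeInBytes(String variablesJson) {
        return variablesJson.getBytes(StandardCharsets.UTF_8).length;
    }

    /** True when the payload fits the illustrative budget. */
    static boolean withinBudget(String variablesJson) {
        return sizeInBytes(variablesJson) <= MAX_VARIABLE_BYTES;
    }

    public static void main(String[] args) {
        // Store a reference to the large document, not the document itself.
        String variables = "{\"orderId\":\"A-1001\",\"documentRef\":\"doc-42\"}";
        System.out.println(sizeInBytes(variables) + " bytes, within budget: "
                + withinBudget(variables));
    }
}
```

When a payload fails a check like this, it is usually a sign that the large document belongs in external storage, with only its key carried as a workflow variable.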