Maybe… We still don’t have a concrete use case to assess. Do you have one in mind?

All engineering is a cost/benefit analysis.

A lot of the surface area for failure is reduced in the Zeebe architecture, and a number of failure mode retries are handled by the broker.

The whole point is that by adopting the Zeebe architecture, a lot of that is taken care of. Yes, there is still the possibility of failure - but most of the scenarios involve failure modes that are not obviously best addressed by adding automated gRPC retry.

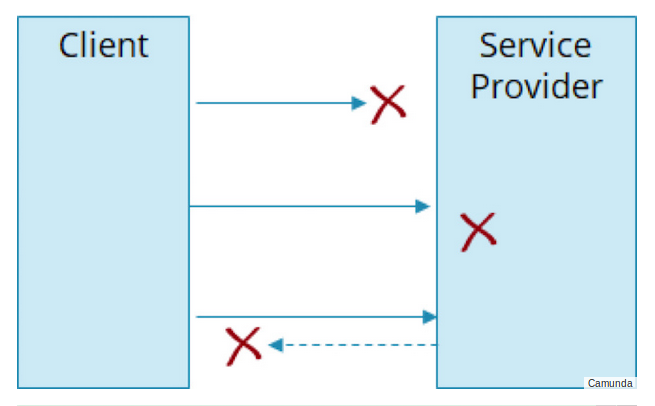

Yes, the network is unreliable. For example, the broker may go away. It’s not a foregone conclusion that the best solution to that is automated retry. In many failures of that severity, bringing the broker back will be a DevOps operation (potentially including Google Cloud Platform engineers restoring their availability zone). The issue we are solving for is how the state of the business process is retained through the failure and reinstated when the component or connection is restored, and what happens to pressure in the system in the meantime.

When the state lives nowhere and everywhere, as it does in peer-to-peer choreography, then failure in a component threatens the business process in a particular way - you need to keep the state alive where it is, and retry is essential. So, in that architecture the benefit is clear, and the cost is clearly worth paying.

I did some more investigation of the engineering effort involved in adding retry to the JavaScript library. It looks like it would involve implementing clientInterceptor calls for the grpc-node library in the node-grpc-client library, which is downstream of grpc-node and upstream of zeebe-node.

The fact that they haven’t implemented it already is an indication (not proof) that it is not needed in practice sufficiently to have driven its development. I would be implementing it upstream without a use-case downstream driving it - which makes the specific implementation speculative in both its necessity and the form it should take.
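To make concrete what automated retry would amount to, wherever it ends up being wired in, here is a minimal sketch. It is not the grpc-node interceptor API: `RetryOptions`, `backoffDelay`, and `withRetry` are hypothetical names of mine, and it retries on any error, which a real implementation would probably want to narrow to specific status codes.

```typescript
// Hypothetical helper: not part of grpc-node, node-grpc-client, or zeebe-node.
interface RetryOptions {
  maxAttempts: number; // total attempts, including the first call
  baseDelayMs: number; // delay after the first failure
  maxDelayMs: number;  // upper bound on any single delay
}

// Capped exponential backoff: base, 2*base, 4*base, ... up to maxDelayMs.
function backoffDelay(attempt: number, opts: RetryOptions): number {
  return Math.min(opts.baseDelayMs * 2 ** (attempt - 1), opts.maxDelayMs);
}

const sleep = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Re-invokes `call` until it succeeds or the attempts run out.
// Assumption: every error is treated as retryable.
async function withRetry<T>(
  call: () => Promise<T>,
  opts: RetryOptions
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= opts.maxAttempts; attempt++) {
    try {
      return await call();
    } catch (err) {
      lastError = err;
      if (attempt < opts.maxAttempts) {
        await sleep(backoffDelay(attempt, opts));
      }
    }
  }
  throw lastError;
}
```

Note that every call wrapped this way keeps its arguments in memory for as long as it is retrying, which is where the question of pressure in the system comes from when the broker is gone for a long time.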

I talked it over with one of the main users of zeebe-node, and at the moment, in their use-case, the rate of that failure mode and its impact aren’t sufficient to warrant it. They persist state at boundaries and use explicit retry to protect against memory pressure. If the broker fails, the last thing you want is cascading failure as memory is exhausted. Then you will lose all in-memory state. Zeebe itself is designed internally to protect against this happening within the broker boundary through an append-only event log on disk plus replication across multiple nodes.

There are other areas of the system, and other recovery modes, that are more appropriate to focus on in a Zeebe system.

That’s not to say that no other failure mode will emerge where retry makes sense, but at the moment it looks like you won’t be manually coding retries at that level. When a call fails, some component is in a hard failure mode, and there is no state for you to retain in the service.

One exception to this that I can see is on the boundary of the system where you trigger workflows from an external system. Long-running processes there may hold the state of the business process in memory and retry operations over a long period of time while they wait for the broker to come back.

Personally, I would put “state outside the engine that cannot be lost” in a database or queue and explicitly retry with business logic.
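As a rough illustration of what I mean, here is a sketch of that pattern: write the request to a store first, then retry from the store under explicit control. Everything in it is hypothetical (`PendingStart`, `Outbox`, `InMemoryOutbox`, `drainOutbox`, and the `startWorkflow` callback are names I made up), and the in-memory store is only a stand-in so the example runs. In practice it would be a database table or a queue.

```typescript
// Hypothetical types and names throughout; not part of zeebe-node.
interface PendingStart {
  id: string;
  payload: Record<string, unknown>;
  attempts: number;
}

interface Outbox {
  add(item: PendingStart): void;
  pending(): PendingStart[];
  markDone(id: string): void;
  recordFailure(id: string): void;
}

// In-memory stand-in so the sketch runs. A real outbox would be durable.
class InMemoryOutbox implements Outbox {
  private items = new Map<string, PendingStart>();

  add(item: PendingStart): void {
    this.items.set(item.id, { ...item });
  }
  pending(): PendingStart[] {
    return [...this.items.values()];
  }
  markDone(id: string): void {
    this.items.delete(id);
  }
  recordFailure(id: string): void {
    const item = this.items.get(id);
    if (item) item.attempts += 1;
  }
}

// Stand-in for whatever call actually starts the workflow on the broker.
type StartWorkflow = (payload: Record<string, unknown>) => Promise<void>;

// One pass over the outbox, meant to be run on a timer or by a scheduler.
// Items that have used up their attempts stay in the store and are reported
// as abandoned, so that business logic decides what happens to them.
async function drainOutbox(
  outbox: Outbox,
  startWorkflow: StartWorkflow,
  maxAttempts = 5
): Promise<{ started: string[]; abandoned: string[] }> {
  const started: string[] = [];
  const abandoned: string[] = [];
  for (const item of outbox.pending()) {
    if (item.attempts >= maxAttempts) {
      abandoned.push(item.id);
      continue;
    }
    try {
      await startWorkflow(item.payload);
      outbox.markDone(item.id);
      started.push(item.id);
    } catch {
      outbox.recordFailure(item.id);
    }
  }
  return { started, abandoned };
}
```

The point of the design is that nothing is held in process memory between passes: if the process dies while the broker is down, the pending requests are still in the store when it comes back.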

Again, I’m open to doing the engineering to put automated retry in the Node library, but it would need to be driven by an actual use-case, balanced against other ways to handle the failure mode.

So if you actually need it, I’m happy to write it.

And: thanks for generating this conversation! This is an aspect that I hadn’t looked into at this level of detail.